AI Bias Causes Problems for Underrepresented Groups

November 20, 2020

Whether many recognize it or not, bias is a pervasive aspect of human thought and experience. However, what was once viewed strictly as a fundamental human flaw has been passed on to a completely different species: artificial intelligence (AI). As AI systems grow more capable and more efficient at learning about the world, their capacity to incorporate biases into their analysis and judgement grows as well.

In its simplest form, artificial intelligence is judgement and understanding exhibited by machines, as opposed to the natural intelligence exhibited by humans and animals. Artificially intelligent machines can go beyond the explicit instructions they were originally programmed with, displaying a capacity to receive and interpret new information they are exposed to.

This capacity for machines to learn and improve without being explicitly programmed is known as machine learning, and it is the most common application of AI. Machine learning brings with it the goal of simulating human thoughts and decisions, and even humanity itself. In 2016, Hong Kong-based AI and robotics company Hanson Robotics unveiled one of the first attempts to replicate an actual human: the social humanoid robot Sophia.

✨ Robotic Chic ✨

Remember this? Check out my full story in @BazaarArabia: https://t.co/6liV7boDaS #TBT pic.twitter.com/RtqvbRQN11

— Sophia the Robot (@RealSophiaRobot) May 28, 2020

As programmers continue to replicate humanity in artificially intelligent machines, they also run the risk of replicating their own unconscious biases. Although technology is generally viewed as strictly fact-based and objective, that expectation does not always hold true once machines gain the ability to act beyond their programming and interpret information on their own.

“A lot of the biases in machine learning & AI come from the actual data being used to train the models. We have biases in the data we use, and the people writing the programs have some of their personal biases as well; a programmer may be coding their own implicit biases into a model without being aware of it,” said Computational and Applied Mathematics major Samantha Stagg (A’17).

Because many AI algorithms rely on data provided by humans, what they learn can be skewed by human error. If programmers do not provide broad, varied, and widely sourced data, they risk passing their own limited knowledge on to their machines. For example, in 2015, multinational tech giant Amazon realized that its hiring algorithm was biased in favor of men’s resumes.

The model was trained to screen applicants by recognizing patterns in resumes submitted to the company over the previous decade. While this seemed like a thorough way to train the AI model, there was one issue: most of those resumes came from men. Because the model did not receive a balanced set of resumes, it learned to prefer male candidates, downgrading resumes that lacked features common in men’s resumes or that contained the word “women’s.”
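To make the mechanism concrete, here is a minimal toy sketch in Python. It is not Amazon’s system, whose internals were not published; the resumes, the word lists, and the functions `learn_word_weights` and `score` are all hypothetical. The scorer simply learns, for each word, the hire rate among past resumes that contained it, which is enough to show how a skewed history can turn a word like “women’s” into a penalty.

```python
from collections import defaultdict

# Hypothetical historical data: (words on a resume, was the applicant hired?).
# Most past applicants and hires were men, so words that appear mainly on
# women's resumes are rare among the "hired" examples.
HISTORY = [
    ({"executed", "captured", "chess", "club"}, True),
    ({"executed", "led", "team"}, True),
    ({"captured", "led", "software"}, True),
    ({"executed", "software", "team"}, True),
    ({"led", "chess", "club"}, False),
    ({"women's", "chess", "club", "led"}, False),
    ({"women's", "software", "team"}, False),
]


def learn_word_weights(history):
    """Learn one weight per word: the hire rate among past resumes containing it."""
    seen = defaultdict(int)
    hired = defaultdict(int)
    for words, was_hired in history:
        for word in words:
            seen[word] += 1
            if was_hired:
                hired[word] += 1
    return {word: hired[word] / seen[word] for word in seen}


def score(resume_words, weights, default=0.5):
    """Average the learned weights; words never seen before get a neutral default."""
    values = [weights.get(word, default) for word in resume_words]
    return sum(values) / len(values)


weights = learn_word_weights(HISTORY)
base = {"led", "software", "team", "chess", "club"}
print(round(score(base, weights), 2))                # → 0.5
print(round(score(base | {"women's"}, weights), 2))  # → 0.42
```

Nothing in the code mentions gender; the lower score for the otherwise identical second resume comes entirely from the imbalanced history the scorer was given.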

The dangers of relying on Artificial Intelligence to make hiring decisions highlighted by difficulties with Amazon’s recruiting tool which developed a gender bias! https://t.co/jvVjfcxds6 #HR #Equality #FutureofWork

— CIPD HR-inform (Ireland) (@HRinform_ROI) October 12, 2018

“Stopping gender bias in society and technology will take lots and lots of work because it’s so rooted into a large portion of society. Through women emerging into all different types of places, though, it will definitely help to eliminate these biases. However, it’s not going to be a short process; it’s going to be a long process of working up to it,” said Grace Cronen (’21).

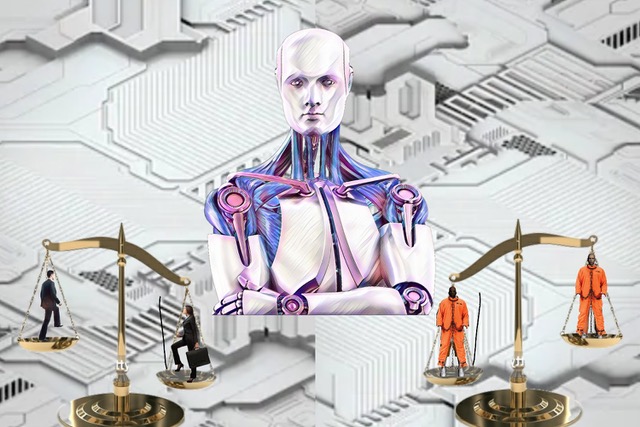

These biases are not limited to gender; racial minorities have encountered them as well. Criminal risk assessment algorithms, designed to predict an offender’s likelihood of recidivism, are the most commonly used form of AI in the U.S. justice system. They take in data about the offender, such as gender, race, age, and socioeconomic status, and use it to estimate how likely that person is to reoffend.

Although these algorithms are favored because they reduce human involvement and are therefore assumed to be impartial, they can absorb biases present in the historical data they learn from and carry those biases into their predictions. These errors disproportionately affect racial minorities and low-income individuals, resulting in harsher sentencing and bail decisions. Such discriminatory tendencies in AI algorithms have come to be described as the New Jim Code, a term coined by sociologist Ruha Benjamin for systemic discrimination built into technological systems.

“Many of the programmers involved in artificial intelligence are white men, so the algorithms will all be based on their decisions, their emotions, and their perspective. This can create bias against both women and minorities because of their lack of involvement and programmers’ perceptions of them. Groups of programmers need to include many types of people,” said Kaela Ramos (’21).

the use of algorithms for "risk assessment" in the criminal justice system is almost completely unaccounted for in judicial oversight, and IMO this should alarm anyone who has worked on or used a website in the last 10 years. https://t.co/pfSDbsTKvM

— hana (@HanaCarpenter) February 7, 2020

Though bias is prevalent in machine learning systems, there are ways to prevent it. First, programmers should take advantage of the relative objectivity and transparency of the machines they program. Here, AI differs from humans: people may be reluctant to share their views or may be unconsciously biased, whereas in a program it is much easier to see what information the machine disregarded and why it was removed. Taking advantage of this aspect of programs is essential to eliminating bias.
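One way to put that inspectability to work is to audit a model’s decisions group by group. The sketch below, using hypothetical function names and made-up decisions, computes each group’s selection rate and flags any group whose rate falls below 80% of the highest group’s rate, the “four-fifths” rule of thumb from U.S. employment-selection guidelines. It is a simple screening check under those assumptions, not a complete fairness analysis.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: list of (group, selected) pairs. Return the selection rate per group."""
    totals, chosen = Counter(), Counter()
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def flag_disparities(decisions, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` times the highest rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return []  # nobody was selected, so there is no rate to compare against
    return sorted(group for group, rate in rates.items() if rate / best < threshold)


# Hypothetical model output: 6 of 10 men selected, 3 of 10 women selected.
decisions = ([("men", True)] * 6 + [("men", False)] * 4
             + [("women", True)] * 3 + [("women", False)] * 7)
print(selection_rates(decisions))   # → {'men': 0.6, 'women': 0.3}
print(flag_disparities(decisions))  # → ['women']
```

Because the check only looks at outcomes, it can be run on any model, including one whose inner workings are hard to interpret.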

Next, programmers should take care to expose AI systems to data that represents ideal conditions rather than conditions as they currently exist in society. Because bias is so widespread, training AI on data sampled at random from society allows it to learn and absorb society’s biases. By being proactive, programmers can ensure that their data represents all groups equally and is non-discriminatory in nature. Finally, programmers should continue to build on existing knowledge of bias in artificial intelligence systems in order to further improve machine learning and curb the development of artificial biases.
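One common way to keep a training set from over-representing one group is to reweight it. The sketch below assumes each training example is tagged with a group label (a hypothetical setup) and gives each example the weight n / (k * count of its group), where n is the number of examples and k is the number of groups, so every group contributes the same total weight. This matches the formula scikit-learn documents for its “balanced” class-weight option, but the functions here are illustrative and not part of any library.

```python
from collections import Counter


def balancing_weights(groups):
    """Return one weight per example so that every group's weights sum to the same total.

    Uses n / (k * count_of_group), so all weights together sum to n.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[group]) for group in groups]


def total_weight_by_group(groups, weights):
    """Sum the weights within each group to check the balance."""
    totals = Counter()
    for group, weight in zip(groups, weights):
        totals[group] += weight
    return dict(totals)


# Hypothetical training set: 8 examples from men, 2 from women.
groups = ["men"] * 8 + ["women"] * 2
weights = balancing_weights(groups)
print(total_weight_by_group(groups, weights))  # → {'men': 5.0, 'women': 5.0}
```

Reweighting only changes how much each group counts during training; it does not fix labels that already encode past discrimination, such as historical hiring decisions, so it complements rather than replaces careful review of the data itself.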

As part of our efforts to bring more people from different backgrounds into AI, we are now partnering with Georgia Tech’s College of Computing to pioneer a first-of-its-kind educational model. Learn more here: https://t.co/OLMxCSgNC4 pic.twitter.com/bYfQ7YuDhP

— Facebook AI (@facebookai) October 22, 2020

“We need to make sure the data we use to train our models doesn’t have any inherent biases. Having standards requiring that any unfair biases in data be removed before being used to generate models would help a lot. I also think that diversifying Silicon Valley and other industries using big data would help. Having more minority programmers writing the code to generate these models would help to remove the human bias in the industry,” said Stagg (A’17).